AI Security

sAIfer Lab studies how to test Artificial Intelligence and make it more secure.

Research on AI Security, carried out at both Pra Lab and SmartLab, emerged after the discovery of vulnerabilities specific to AI systems. In domains such as cybersecurity, securing AI against these vulnerabilities is essential to building reliable and resilient systems and to designing technologies that can withstand evolving adversarial tactics. This research area includes the following three main sub-topics.

AI Attacks and Defenses: in this sub-topic, we study the mechanisms behind evasion and poisoning attacks. The former craft input manipulations at test time that cause the system to make errors. The latter inject samples into the training data to cause a high error rate or specific malicious behaviors in the model after training.
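To make the two attack families concrete, here is a minimal, self-contained sketch in plain Python. It is only an illustration under stated assumptions, not the lab's method or the API of any of its libraries: the toy linear detector, the nearest-centroid classifier, and all names and numbers (`evade`, `fit_centroids`, `eps`, the data points) are hypothetical and chosen for readability.

```python
# Toy illustration of evasion and poisoning (all models and data are hypothetical).

def score(w, b, x):
    """Linear detector: a positive score means 'malicious'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    return (v > 0) - (v < 0)

def evade(w, x, eps):
    """Evasion: shift each feature by eps against the gradient sign.
    For a linear model the gradient of the score w.r.t. x is simply w."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

def fit_centroids(data):
    """Train a nearest-centroid classifier from (point, label) pairs."""
    by_label = {}
    for x, y in data:
        by_label.setdefault(y, []).append(x)
    return {
        y: [sum(p[i] for p in pts) / len(pts) for i in range(len(pts[0]))]
        for y, pts in by_label.items()
    }

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    def sqdist(c):
        return sum((ci - xi) ** 2 for ci, xi in zip(c, x))
    return min(centroids, key=lambda y: sqdist(centroids[y]))

# --- Evasion: perturb a test input, the model is left untouched ---
w, b = [2.0, -1.0, 0.5], -0.5
x = [1.0, 0.5, 1.0]
x_adv = evade(w, x, eps=0.5)
print("score before:", score(w, b, x), "after:", score(w, b, x_adv))

# --- Poisoning: inject training samples, the test input is left untouched ---
clean = [([0, 0], 0), ([1, 0], 0), ([0, 1], 0),
         ([4, 4], 1), ([5, 4], 1), ([4, 5], 1)]
poison = [([6, 6], 0)] * 3          # attacker-chosen points with label 0
target = [3, 3]
clean_pred = predict(fit_centroids(clean), target)
poisoned_pred = predict(fit_centroids(clean + poison), target)
print("prediction on clean data:", clean_pred, "on poisoned data:", poisoned_pred)
```

The contrast between the two halves is the point: evasion changes the input seen at test time, while poisoning changes what the model learns. Gradient-based attacks on real models follow the same idea but compute the gradient numerically rather than reading it off the weights.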

Secure AI for Cybersecurity: our research designs AI-driven cybersecurity solutions to detect threats and vulnerabilities. Applications include AI-based web security solutions that detect attacks from malicious users, malware detectors that block malicious programs before they are executed, and spam filters that block undesired and potentially dangerous emails before they reach users' mailboxes.

Large Language Model Security: we explore and develop techniques to assess the robustness of Large Language Models, and integrate security measures so that these powerful tools can be used safely and effectively.

sAIfer Lab provides tools and solutions to advance research on these topics, including:

SecML-Torch, a PyTorch-powered Python library for evaluating the security of AI/ML technologies against evasion and poisoning attacks;

SecML Malware, an extension of the previous library tailored to attacking Windows malware detectors;

AttackBench, a benchmark framework for fairly comparing gradient-based attacks, developed to identify the most reliable one to use for robustness verification.

To share our research results and train researchers on these topics, we have released a free online course on ML Security. We also regularly organize the MLSec Seminars, a series of events in which we invite researchers from both academia and industry to talk about innovations and recent advances in Machine Learning security.

Research Topics

Active research projects

LAB DIRECTOR

Fabio Roli - Full Professor

RESEARCH DIRECTORS

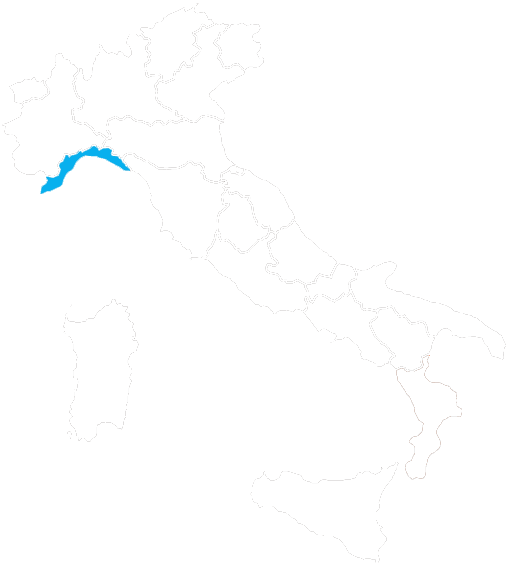

Battista Biggio - Full Professor

Antonio Cinà - Assistant Professor

Luca Demetrio - Assistant Professor

FACULTY MEMBERS

Fabio Brau - Assistant Professor

Ambra Demontis - Assistant Professor

Maura Pintor - Assistant Professor

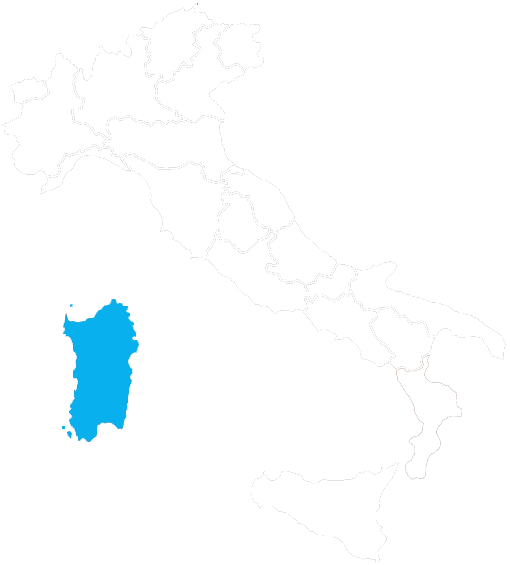

Angelo Sotgiu - Assistant Professor

POSTDOCS

Wei Guo

PhD STUDENTS

Daniele Angioni

Chen Dang

Hicham Eddoubi

Giuseppe Floris

Daniele Ghiani

Srishti Gupta

Dario Lazzaro

Luca Minnei

Fabrizio Mori

Raffaele Mura

Giorgio Piras

Christian Scano

Luca Scionis

Dmitrijs Trizna

RESEARCH ASSOCIATES

Sara Repetto

Jinhua Xu